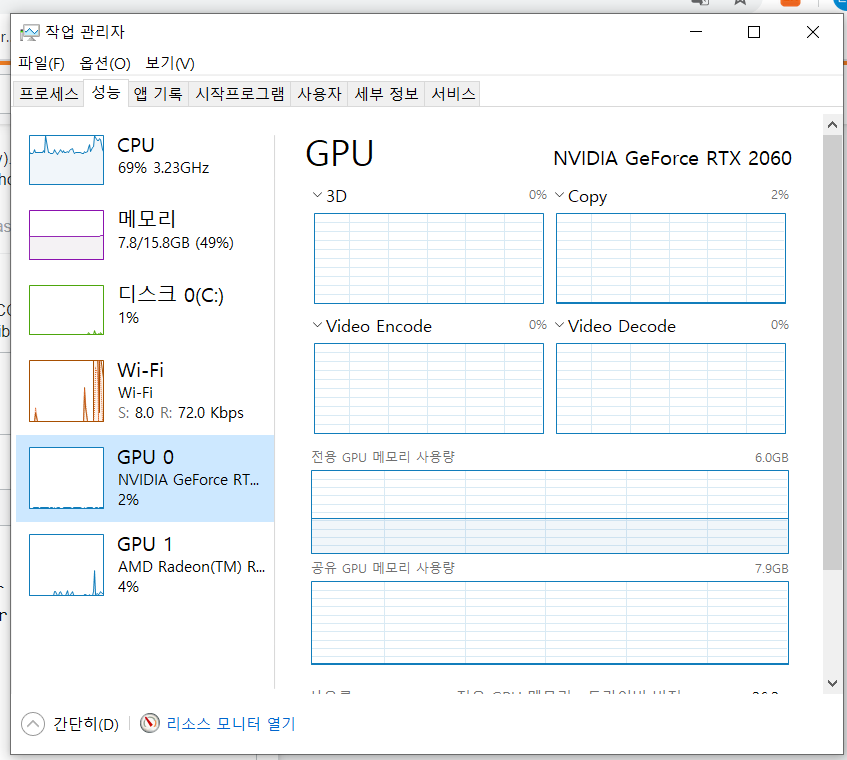

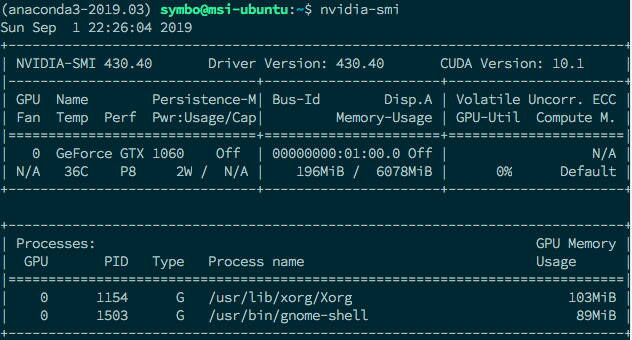

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

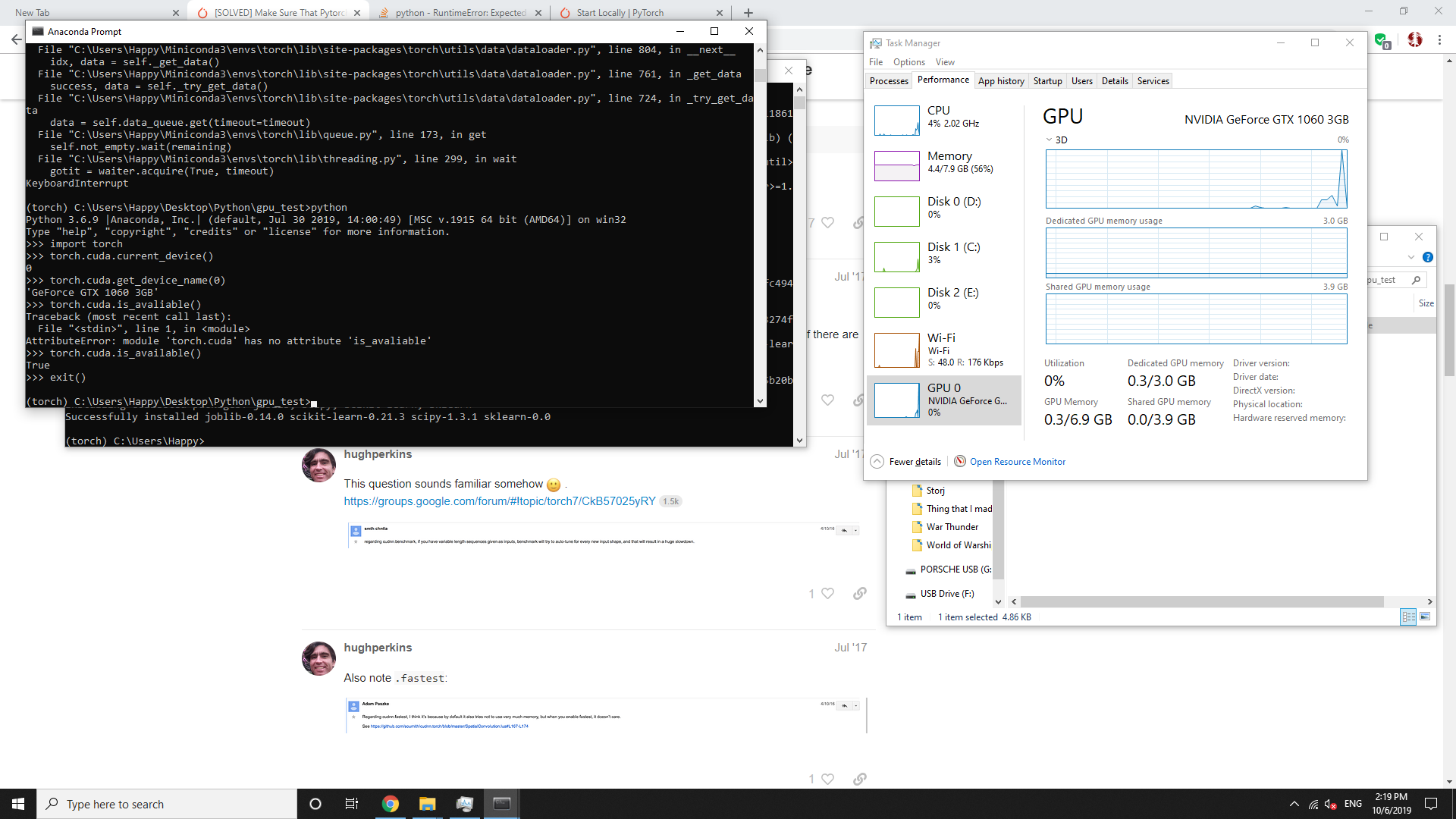

PyTorch is installed successfully, but the GPU cannot be used: "PyTorch no longer supports this GPU" and "CUDA error: no kernel image is available"

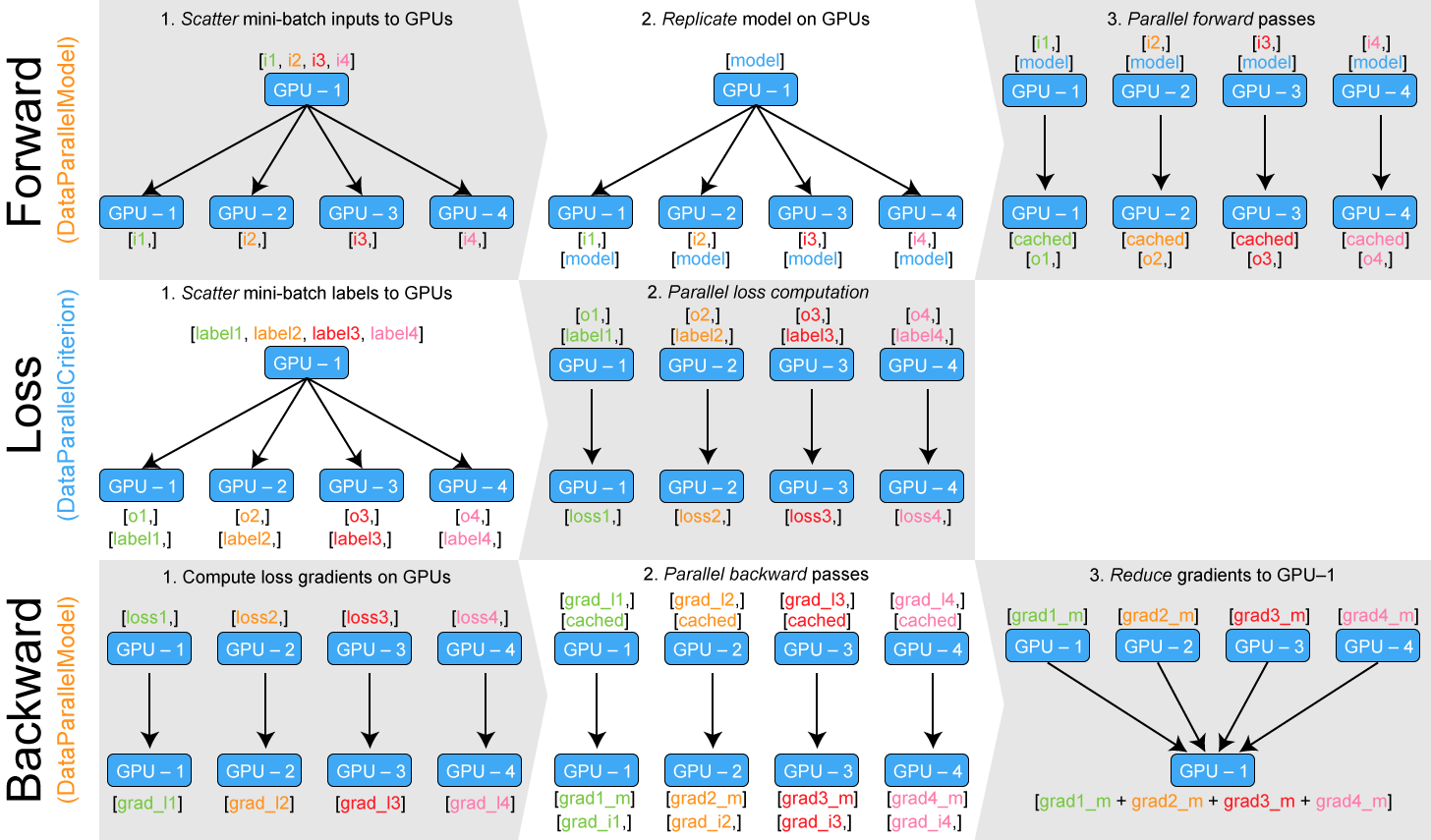

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

PyTorch in Ray Docker container with NVIDIA GPU support on Google Cloud | by Mikhail Volkov | Volkov Labs

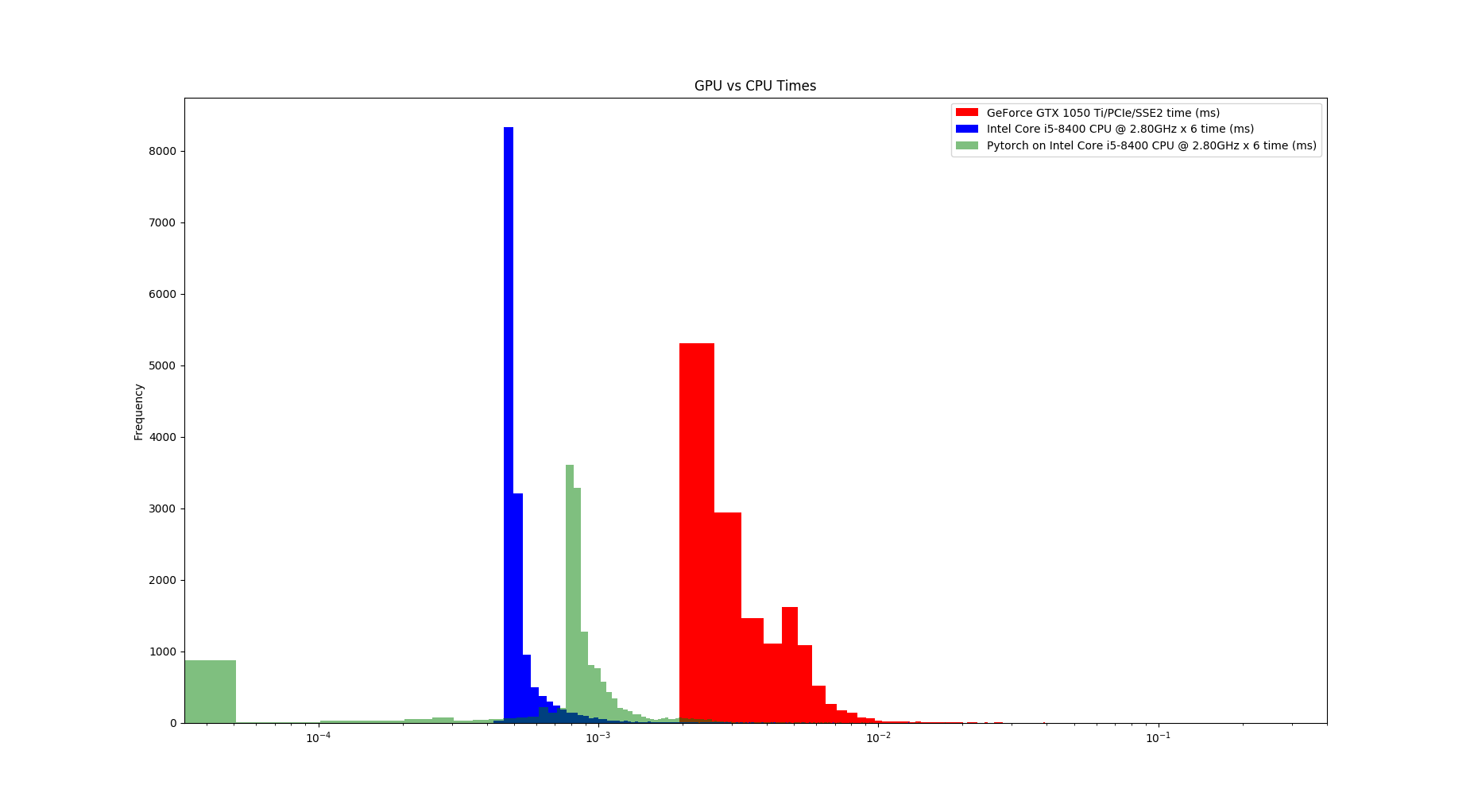

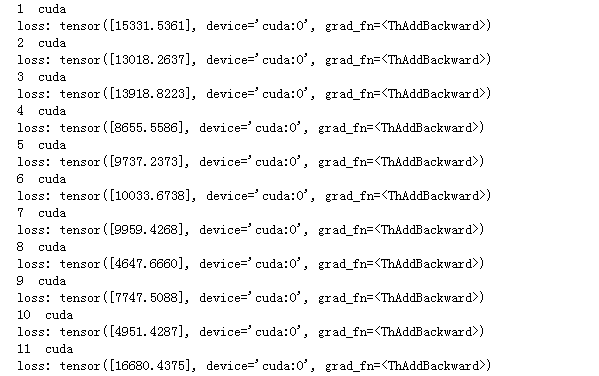

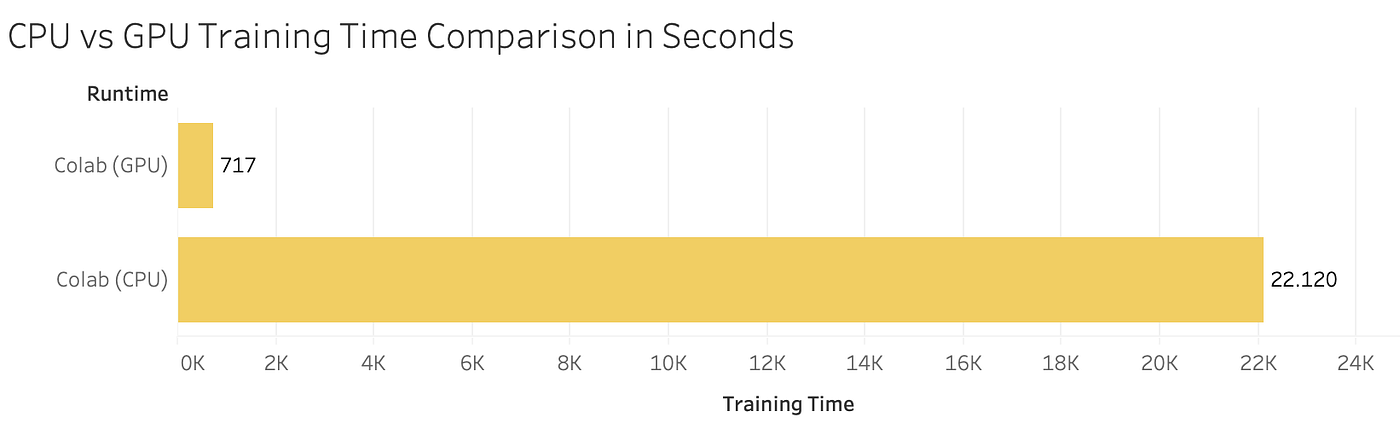

PyTorch: Switching to the GPU. How and Why to train models on the GPU… | by Dario Radečić | Towards Data Science
